A Deep Dive into QueryGPT: Uber’s AI-Powered SQL Generator

Uber’s QueryGPT is an advanced AI-powered system that translates natural language inputs into optimized SQL queries, significantly enhancing data accessibility and operational efficiency. Built upon Large Language Models (LLMs), vector databases, and retrieval-augmented generation (RAG), QueryGPT streamlines the SQL query-writing process by automating dataset selection, query structuring, and optimization.

The Problem with Manual SQL Querying

Uber processes approximately 1.2 million interactive queries monthly, and its Operations team contributes a significant portion of them. Traditionally, writing a SQL query means understanding table schemas, locating the relevant datasets, and constructing the query by hand, which takes around 10 minutes per query. QueryGPT cuts query authoring time to about 3 minutes, which speeds up decision-making across teams.

QueryGPT’s Architecture and Evolution

First built during Uber’s Generative AI Hackdays in May 2023, QueryGPT started as a Retrieval-Augmented Generation (RAG) system: it vectorized the user’s natural language prompt, ran a similarity search against stored SQL samples and schemas, and passed the closest matches to the LLM to guide its choice of schema and tables.
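
The retrieval step described above can be sketched in a few lines. This is a minimal illustration, not Uber's implementation: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and the sample descriptions, table names, and SQL strings are hypothetical.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term count."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def top_k(question: str, samples: list, k: int = 2) -> list:
    """Return the k stored SQL samples whose descriptions are closest to the question."""
    q = embed(question)
    return sorted(samples, key=lambda s: cosine(q, embed(s["description"])), reverse=True)[:k]

# Hypothetical SQL samples; a real system would keep these in a vector database.
SAMPLES = [
    {"description": "count completed trips per city yesterday",
     "sql": "SELECT city_id, COUNT(*) FROM trips WHERE status = 'completed' GROUP BY city_id"},
    {"description": "total ad spend per campaign",
     "sql": "SELECT campaign_id, SUM(spend) FROM ad_spend GROUP BY campaign_id"},
    {"description": "cancelled trips per driver last week",
     "sql": "SELECT driver_id, COUNT(*) FROM trips WHERE status = 'cancelled' GROUP BY driver_id"},
]

best = top_k("how many trips were completed per city", SAMPLES, k=1)
print(best[0]["sql"])
```

The retrieved samples are what gets handed to the LLM as context, so retrieval quality directly limits how good the generated SQL can be.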

Key Components of QueryGPT

To improve accuracy, efficiency, and cost-effectiveness, Uber enhanced QueryGPT with the following components:

- Workspaces: Structured repositories that classify SQL samples and database tables into business domains like Mobility, Core Services, and Ads. This ensures that query generation remains domain-specific and relevant.

- Intent Agents: These AI-driven agents categorize user queries based on intent and align them with predefined business domains, ensuring contextually accurate query generation.

- Table Agents: Responsible for table selection and validation, ensuring that the generated query references the correct datasets while reducing redundant joins.

- Column Prune Agents: Optimize schema inputs by removing irrelevant columns, keeping the token size manageable and reducing the computational cost of LLM queries.
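
One way to picture a workspace is as a named bundle of tables and SQL samples that bounds what the later agents are allowed to search. The sketch below assumes that shape; the `Workspace` class, its fields, and the table names are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass, field

@dataclass
class Workspace:
    """A business domain with the tables and SQL samples curated for it."""
    name: str
    tables: list = field(default_factory=list)
    sql_samples: list = field(default_factory=list)

# Hypothetical contents; the domain names come from the article, the tables do not.
WORKSPACES = {
    "Mobility": Workspace(
        "Mobility",
        tables=["trips", "drivers"],
        sql_samples=["SELECT city_id, COUNT(*) FROM trips GROUP BY city_id"],
    ),
    "Ads": Workspace(
        "Ads",
        tables=["ad_spend"],
        sql_samples=["SELECT campaign_id, SUM(spend) FROM ad_spend GROUP BY campaign_id"],
    ),
}

def tables_in_scope(workspace_name: str) -> list:
    """Only tables from the chosen workspace are candidates for the query."""
    return WORKSPACES[workspace_name].tables

print(tables_in_scope("Mobility"))
```

Narrowing the search space this way is what lets the Intent, Table, and Column Prune Agents work on a small, relevant slice of the catalog.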

How QueryGPT Works

QueryGPT’s workflow consists of multiple stages to ensure accuracy, efficiency, and reliability in SQL query generation:

1. Intent Recognition

Using vector-based semantic search, QueryGPT first interprets the natural language prompt and classifies it under an appropriate business domain. For example, a query related to ride cancellations would be mapped to the Mobility workspace.
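
As a rough illustration of this mapping, the sketch below scores a prompt against a keyword set per workspace. Keyword overlap is a deliberately simple stand-in for the semantic search the article describes, and the keyword lists and the `classify_intent` helper are assumptions made for the example.

```python
# Hypothetical keyword sets per workspace; a real system would use embeddings or an LLM.
WORKSPACE_KEYWORDS = {
    "Mobility": {"trip", "trips", "ride", "rides", "driver", "cancellations", "cancelled"},
    "Ads": {"ad", "ads", "campaign", "impressions", "spend"},
    "Core Services": {"account", "login", "payment", "support"},
}

def classify_intent(prompt: str) -> str:
    """Pick the workspace whose keywords overlap most with the prompt."""
    tokens = set(prompt.lower().replace("?", " ").split())
    scores = {name: len(tokens & keywords) for name, keywords in WORKSPACE_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    # Fall back to "Unknown" so the caller can ask the user to choose a workspace.
    return best if scores[best] > 0 else "Unknown"

print(classify_intent("How many ride cancellations happened last week?"))
```

Returning an explicit "Unknown" keeps a weak match from silently routing the prompt to the wrong domain.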

2. Table Selection

Once the domain is identified, Table Agents perform similarity searches against Uber’s metadata store, selecting relevant tables based on historical SQL queries and predefined relationships. Users can override these selections to ensure accuracy.
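
The propose-then-override flow might look like the sketch below. It assumes each table has a short text description to match against; the table names, descriptions, and both helper functions are hypothetical, and word overlap again stands in for a real similarity search.

```python
def propose_tables(prompt: str, table_descriptions: dict, limit: int = 2) -> list:
    """Rank tables by word overlap between the prompt and each table description."""
    tokens = set(prompt.lower().split())
    scored = [(len(tokens & set(desc.lower().split())), name)
              for name, desc in table_descriptions.items()]
    # Highest score first; ties broken alphabetically so the result is deterministic.
    ranked = [name for score, name in sorted(scored, key=lambda p: (-p[0], p[1])) if score > 0]
    return ranked[:limit]

def apply_override(proposed: list, add=(), remove=()) -> list:
    """Let the user drop or add tables before the query is generated."""
    kept = [t for t in proposed if t not in remove]
    return kept + [t for t in add if t not in kept]

# Hypothetical metadata for the Mobility workspace.
MOBILITY_TABLES = {
    "trips": "one row per trip with status city and fare",
    "drivers": "one row per driver with rating and city",
    "vehicles": "one row per vehicle with make and model",
}

proposed = propose_tables("average fare per trip by city", MOBILITY_TABLES)
final = apply_override(proposed, add=["cities"], remove=["drivers"])
print(proposed, final)
```

Keeping the override as a separate step means the user's correction is applied to the agent's proposal rather than replacing it wholesale.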

3. Schema Optimization and Column Pruning

To keep prompts small, Column Prune Agents analyze the selected tables and filter out columns that are irrelevant to the question. Fewer columns means fewer tokens passed to the LLM, which lowers both cost and latency.
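
The effect of pruning on prompt size can be shown with a small sketch. Here the set of needed columns is supplied directly, whereas in QueryGPT an agent decides it; the schema, the column names, and the crude one-token-per-name estimate are all assumptions for illustration.

```python
def prune_columns(schema: dict, needed: set) -> dict:
    """Keep only the columns judged relevant, preserving table and column order."""
    return {table: [c for c in cols if c in needed] for table, cols in schema.items()}

def approx_tokens(schema: dict) -> int:
    """Crude size estimate: one token per table name plus one per column name."""
    return sum(1 + len(cols) for cols in schema.values())

# Hypothetical schema for two selected tables.
SCHEMA = {
    "trips": ["trip_id", "city_id", "status", "fare", "pickup_lat", "pickup_lng"],
    "drivers": ["driver_id", "rating", "signup_date"],
}
NEEDED = {"trip_id", "city_id", "status", "driver_id"}

pruned = prune_columns(SCHEMA, NEEDED)
print(approx_tokens(SCHEMA), "->", approx_tokens(pruned))
```

On wide production tables with hundreds of columns, the same idea removes a much larger share of the prompt than this toy schema suggests.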

4. Query Generation with RAG

After preprocessing, QueryGPT retrieves relevant SQL examples from the workspace and feeds them, along with user inputs, into the LLM using Retrieval-Augmented Generation (RAG). The LLM then generates an SQL query while ensuring:

- Proper table joins and schema compatibility.

- Alignment with pre-existing query patterns to maintain consistency.

- Inclusion of aggregations, filters, and ordering where applicable.
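
A sketch of how the pieces could be assembled into a single prompt follows. The prompt wording, the `build_prompt` helper, and the sample schema are hypothetical; the actual call to an LLM is left out because the article does not say which model or client is used.

```python
def build_prompt(question: str, schema: dict, examples: list) -> str:
    """Combine instructions, pruned schema, retrieved examples, and the user question."""
    schema_lines = [f"- {table}({', '.join(cols)})" for table, cols in schema.items()]
    example_lines = [f"Q: {q}\nSQL: {sql}" for q, sql in examples]
    return "\n\n".join([
        "You write SQL. Use only the tables and columns listed.",
        "Schema:\n" + "\n".join(schema_lines),
        "Examples:\n" + "\n\n".join(example_lines),
        f"Q: {question}\nSQL:",
    ])

# Hypothetical inputs produced by the earlier stages.
prompt = build_prompt(
    question="How many trips were cancelled per city?",
    schema={"trips": ["trip_id", "city_id", "status"]},
    examples=[("count trips per city", "SELECT city_id, COUNT(*) FROM trips GROUP BY city_id")],
)
print(prompt)
```

Ending the prompt with an open `SQL:` line mirrors the format of the retrieved examples, which nudges the model toward the same query patterns.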

5. Validation and Explanation

Once the query is generated, QueryGPT:

- Validates it against Uber’s metadata registry to ensure correctness.

- Provides an explanation of how the query was constructed, allowing users to tweak and refine it before execution.
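
A minimal version of the validation check is sketched below: it pulls table names out of the generated SQL and flags any that the registry does not know. The regular expression is a simplification (a production system would use a proper SQL parser), and `REGISTRY` and both helpers are hypothetical.

```python
import re

# Hypothetical metadata registry: the set of tables known to exist.
REGISTRY = {"trips", "drivers"}

def referenced_tables(sql: str) -> set:
    """Extract identifiers that follow FROM or JOIN. Subqueries and CTEs are not handled."""
    matches = re.findall(r"\b(?:FROM|JOIN)\s+([A-Za-z_][\w.]*)", sql, flags=re.IGNORECASE)
    return {name.lower() for name in matches}

def unknown_tables(sql: str, registry: set) -> set:
    """Tables the query references that are missing from the registry."""
    return referenced_tables(sql) - registry

good = "SELECT t.city_id FROM trips t JOIN drivers d ON t.driver_id = d.driver_id"
bad = "SELECT * FROM rides"
print(unknown_tables(good, REGISTRY), unknown_tables(bad, REGISTRY))
```

A non-empty result is a cheap signal that the LLM hallucinated a table, and it can be caught before the query is ever executed.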

Evaluation and Continuous Improvement

Uber has implemented a structured evaluation framework to ensure QueryGPT consistently delivers accurate and optimized SQL queries:

- Golden Question-to-SQL Mappings: A dataset of manually verified SQL queries is used as a benchmark to measure accuracy.

- Automated Metrics Tracking: QueryGPT evaluates intent accuracy, table selection reliability, and overall query quality through continuous monitoring and user feedback loops.

- Component-Level Auditing: Each stage of the query generation process is tracked independently, allowing Uber to refine and improve individual modules such as Intent Agents, Table Agents, and Column Prune Agents.
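
Component-level scoring against a golden set could be sketched as follows. The two metrics here (intent accuracy, and the share of expected tables that were actually selected) are illustrative choices, and the record layout and sample data are hypothetical.

```python
def table_overlap(expected: set, actual: set) -> float:
    """Share of the expected tables that the system actually selected."""
    return len(expected & actual) / len(expected) if expected else 1.0

def evaluate(golden: list, predictions: list) -> dict:
    """Score each component separately so regressions can be traced to one stage."""
    n = len(golden)
    intent_hits = sum(g["workspace"] == p["workspace"] for g, p in zip(golden, predictions))
    overlap = sum(table_overlap(set(g["tables"]), set(p["tables"]))
                  for g, p in zip(golden, predictions))
    return {"intent_accuracy": intent_hits / n, "mean_table_overlap": overlap / n}

# Hypothetical golden records and system outputs for two questions.
GOLDEN = [
    {"workspace": "Mobility", "tables": ["trips", "drivers"]},
    {"workspace": "Ads", "tables": ["ad_spend"]},
]
PREDICTIONS = [
    {"workspace": "Mobility", "tables": ["trips"]},
    {"workspace": "Mobility", "tables": ["ad_spend"]},
]

report = evaluate(GOLDEN, PREDICTIONS)
print(report)
```

Separating the scores shows, for instance, that the second question failed at intent recognition even though the right table was selected.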

Real-World Impact and Future Potential

QueryGPT has already demonstrated tangible benefits at Uber, saving an estimated 140,000 hours per month in query-writing effort (roughly 7 minutes saved on each of 1.2 million monthly queries). By automating complex SQL generation, it lets teams focus on data-driven insights rather than on constructing queries by hand.
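
The 140,000-hour figure follows from the numbers quoted earlier in the article, as the short calculation below shows. It assumes every one of the monthly queries is authored through QueryGPT and that each saves the full difference between 10 and 3 minutes, so it is best read as an upper-bound estimate.

```python
QUERIES_PER_MONTH = 1_200_000      # interactive queries per month, from the article
MINUTES_MANUAL = 10                # typical time to write a query by hand
MINUTES_WITH_QUERYGPT = 3          # typical time with QueryGPT

minutes_saved = QUERIES_PER_MONTH * (MINUTES_MANUAL - MINUTES_WITH_QUERYGPT)
hours_saved = minutes_saved / 60

print(f"{hours_saved:,.0f} hours per month")  # prints "140,000 hours per month"
```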

As AI-powered query generation tools evolve, they are expected to play a pivotal role in the broader enterprise data ecosystem, making self-service data access more efficient and scalable.

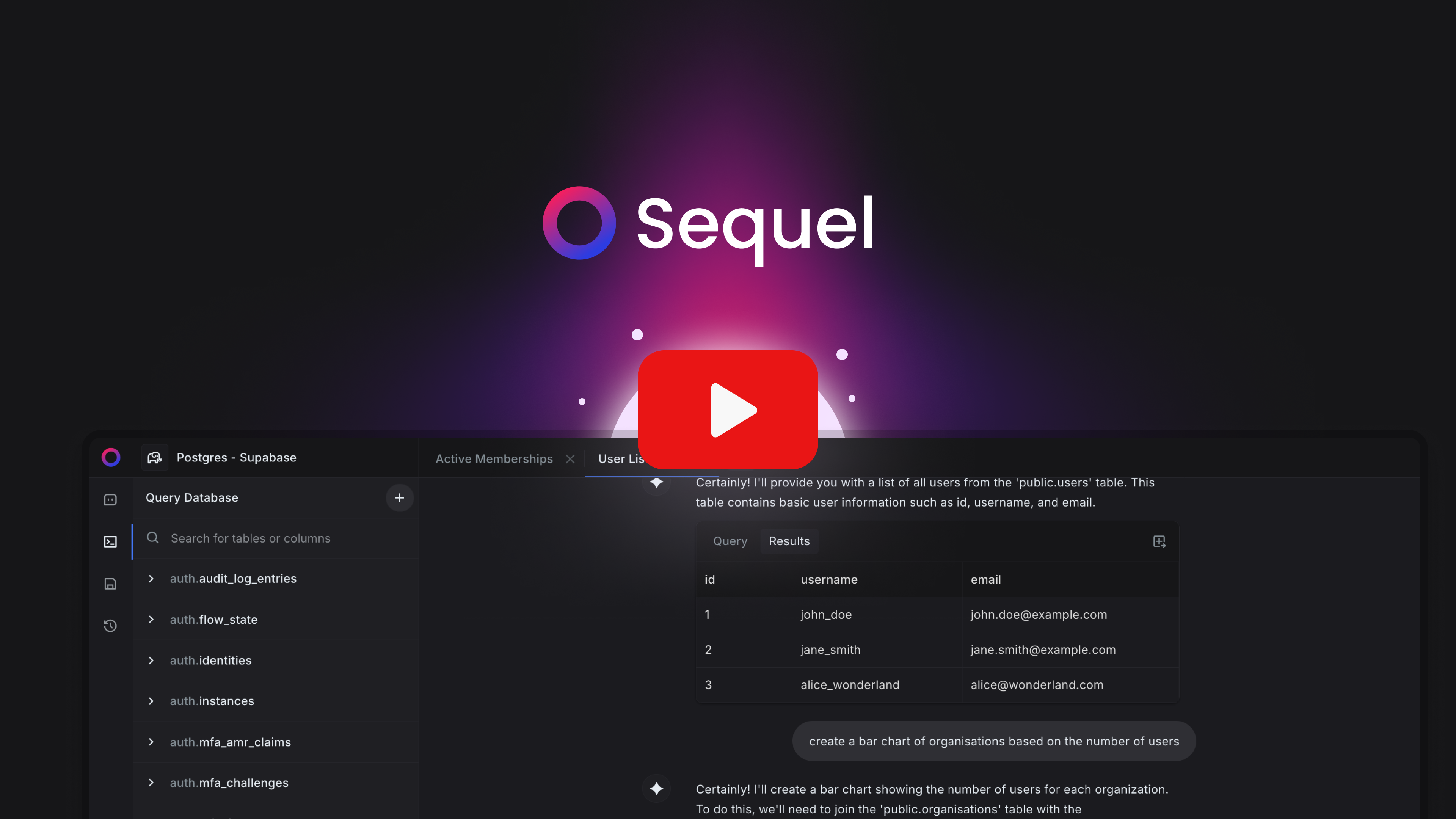

For businesses outside Uber seeking a robust text-to-SQL solution, Sequel.sh provides a SaaS platform that converts natural language questions into SQL. Sequel.sh offers data analysis and visualization that any team in your organization can use without writing a single line of SQL.

How Sequel Enhances SQL Query Generation

Sequel offers several powerful features that simplify the SQL querying process:

- No Manual Schema Uploads: Automatically connects to your database, eliminating the need for manual schema dumps.

- Instant Query Execution: Queries are run immediately, and results are returned without extra steps.

- Error Correction: Automatically fixes SQL errors for a smoother experience.

- Data Visualization: Generates charts and graphs from query results for easier data analysis.

With these features, Sequel saves time and boosts productivity, making it an ideal solution for data teams.
