SQL Query Optimization - Tips, Best Practices, Examples

As businesses handle increasing amounts of data, the efficiency of your SQL queries becomes critical. Poorly optimized queries can slow down your database, impacting both internal workflows and customer experience.

Whether you're aiming to speed up data retrieval for your team or ensure that users don’t face long waits, optimizing SQL queries is key.

In this blog, we'll cover essential tips to help you improve SQL query performance, ensuring faster results and more efficient database management.

What is SQL Query Optimization?

SQL query optimization is the process of refining SQL queries so that the database returns the same results using less time and fewer resources. Well-optimized queries let the database retrieve information efficiently. Without optimization, queries can be slow and resource-intensive, especially as tables grow.

Why SQL Query Optimization is Necessary

The primary aim of SQL query optimization is to minimize system resource usage while still returning correct results quickly. Key reasons to focus on it include:

- Boosting Performance: Optimized queries reduce response time, the delay between sending a request and receiving data, which directly affects user experience.

- Reducing Execution Time: Optimized queries significantly reduce the CPU time required for execution, enabling faster results. This leads to quicker website responses, avoiding noticeable delays.

- Improving Efficiency: Query optimization helps decrease the demand on hardware resources, allowing servers to run more efficiently with lower power and memory consumption.

10 Tips To Optimize SQL Queries for Better Performance

Optimizing SQL queries is essential to improve database performance, reduce execution time, and ensure efficient use of resources. Whether you're dealing with small datasets or large-scale databases, these 10 tips will help you fine-tune your queries for better performance.

1. Choose the Right Data Types for Optimal Performance

Selecting suitable data types for your database columns is crucial for query efficiency and data integrity. Proper data type selection not only improves query speed but also ensures accurate data representation and storage efficiency.

Consider a scenario where you're designing a database for a fitness-tracking app. You have a table to store user workout sessions with columns for user ID, workout date, duration (in minutes), and calories burned.

Choosing appropriate data types might look like this:

- User ID: INTEGER (assuming it's a numeric identifier)

- Workout Date: DATE

- Duration: SMALLINT (as workout durations are typically short)

- Calories Burned: DECIMAL(6,2) (for precise calorie counts with two decimal places)

Using these specific types instead of generic ones like VARCHAR can lead to several benefits:

- Improved Query Performance: When you use INT for User ID instead of VARCHAR, comparisons and joins become faster.

- Data Integrity: SMALLINT for duration rejects values above 32,767, which blocks some obviously bad data. For tighter limits (for example, no workout longer than a day), add a CHECK constraint.

- Storage Efficiency: SMALLINT for duration uses less space than INT or BIGINT, saving storage for large datasets.

- Accurate Calculations: DECIMAL for calories allows precise calculations without floating-point errors.

Remember, while it's crucial to choose suitable data types initially, it's also important to review and optimize them as your application evolves. Regularly analyze your query patterns and adjust data types if necessary to maintain peak performance.
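Python's built-in sqlite3 module is a convenient place to try these ideas, with one caveat: SQLite uses type affinity, so declaring a column SMALLINT or DECIMAL(6,2) there enforces neither a range nor exact decimal storage. The minimal sketch below, assuming SQLite and a hypothetical workout_sessions table, adds a CHECK constraint to enforce a sensible duration range, and uses Python's decimal module to show the floating-point rounding error that true DECIMAL columns (as in MySQL, PostgreSQL, or SQL Server) are designed to avoid:

```python
import sqlite3
from decimal import Decimal

conn = sqlite3.connect(":memory:")

# Hypothetical schema for the fitness-tracking example. SQLite does not
# enforce the size of SMALLINT, so the CHECK constraint does that job.
conn.execute("""
    CREATE TABLE workout_sessions (
        user_id         INTEGER  NOT NULL,
        workout_date    DATE     NOT NULL,
        duration_min    SMALLINT NOT NULL CHECK (duration_min BETWEEN 0 AND 1440),
        calories_burned DECIMAL(6, 2)
    )
""")

def try_insert(duration):
    """Return True if the row was accepted, False if a constraint rejected it."""
    try:
        with conn:  # commits on success, rolls back and re-raises on error
            conn.execute(
                "INSERT INTO workout_sessions VALUES (?, ?, ?, ?)",
                (1, "2024-05-01", duration, None),
            )
        return True
    except sqlite3.IntegrityError:
        return False

accepted = try_insert(45)       # a normal 45-minute workout
rejected = try_insert(100000)   # longer than a day: blocked by the CHECK

# Binary floating point cannot represent 0.1 exactly; Decimal can.
float_sum = 0.1 + 0.2
decimal_sum = Decimal("0.1") + Decimal("0.2")
print(accepted, rejected, float_sum == 0.3, decimal_sum == Decimal("0.3"))
# prints: True False False True
```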

2. Reduce Wildcard Usage for Enhanced Query Speed

Wildcard characters (% and _) in SQL queries can often lead to slower performance. When wildcards are used, especially at the start of a search pattern, the database may need to scan entire tables, which can be time-consuming for large datasets.

Let's look at a practical example. Suppose you're managing a library database and want to find all books with titles containing the word "Adventure". You might be tempted to write:

SELECT * FROM books WHERE title LIKE '%Adventure%';

While this query works, the leading % means a standard B-tree index on title cannot be used to seek to matching rows, so the database typically scans every row. That becomes slow as the catalog grows.

To optimize this, consider these alternatives:

1. If possible, use a more specific search:

SELECT * FROM books WHERE title LIKE 'Adventure%';

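This still uses a wildcard, but because the pattern starts with a fixed prefix, the database can use an index on the title column to jump straight to matching rows. Note that it is not equivalent to the original query: it only matches titles that begin with "Adventure".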

2. For more complex searches, consider using full-text search features if your database supports them. These are often faster than LIKE with wildcards.

3. If wildcards are necessary, try to combine them with other conditions to narrow down the search:

SELECT * FROM books

WHERE publish_year > 2000 AND title LIKE '%Adventure%';

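The extra condition helps most when publish_year is indexed and selective: the database can use that index to narrow down the candidate rows and only has to check the LIKE pattern against those. Wildcards are sometimes necessary, but they should be used thoughtfully.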

When possible, favor non-wildcard conditions or use wildcards at the end of search terms rather than the beginning. This approach often allows better use of indexes and can significantly improve query performance.

By being strategic with wildcard usage, you can often achieve noticeable speed improvements in your SQL queries.
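You can watch this difference in a query plan. The sketch below uses SQLite's EXPLAIN QUERY PLAN on a hypothetical books table. One SQLite-specific assumption: its LIKE is case-insensitive by default, so the prefix optimization only applies when the indexed column uses the NOCASE collation, which is why the column is declared that way. Other engines have their own requirements (PostgreSQL, for instance, may need an index built with the text_pattern_ops operator class when the database does not use the C locale), so check your engine's documentation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE books (
        book_id      INTEGER PRIMARY KEY,
        title        TEXT COLLATE NOCASE,  -- lets SQLite's case-insensitive LIKE use the index
        publish_year INTEGER
    )
""")
conn.execute("CREATE INDEX idx_books_title ON books (title)")

def plan(sql):
    """Return the detail text of every EXPLAIN QUERY PLAN row, joined."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()
    return " | ".join(row[-1] for row in rows)

prefix_plan = plan("SELECT * FROM books WHERE title LIKE 'Adventure%'")
infix_plan = plan("SELECT * FROM books WHERE title LIKE '%Adventure%'")

print(prefix_plan)  # expect a SEARCH using idx_books_title
print(infix_plan)   # expect a SCAN of the whole table
```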

3. Minimize Subquery Usage for Better Performance

While subqueries can be useful, they can lead to slower query execution, especially when used in WHERE or HAVING clauses. Many modern optimizers rewrite simple subqueries into joins automatically, but not all do, so it's often worth expressing the query as a JOIN yourself when possible.

Let's consider a scenario where you're managing a library database. You want to find all authors who have written books published in the previous calendar year. A subquery approach might look like this:

SELECT * FROM authors

WHERE author_id IN (

SELECT author_id

FROM books

WHERE publication_year = YEAR(CURRENT_DATE) - 1

);

While this works, it can be inefficient on some databases, particularly for large datasets. Depending on the optimizer, the subquery may be re-evaluated for each row in the authors table instead of being turned into a join, which leads to poor performance.

Instead, you can rewrite this using a JOIN:

SELECT DISTINCT a.*

FROM authors a

JOIN books b ON a.author_id = b.author_id

WHERE b.publication_year = YEAR(CURRENT_DATE) - 1;

This JOIN-based query is often more efficient because it gives the optimizer more freedom to choose the join order and join algorithm. The DISTINCT is required because an author with several books from that year would otherwise appear once per book, and removing those duplicates has its own cost.

Here are some general tips for dealing with subqueries:

- Look for opportunities to replace subqueries with JOINs.

- If a subquery is necessary, try to use it in the FROM clause rather than in WHERE or HAVING.

- For complex queries, consider using Common Table Expressions (CTEs) to improve readability and potentially performance.

- Use EXPLAIN or your database's query plan tool to compare the performance of different approaches.
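Before swapping one form for the other, it is worth confirming they return the same rows. A minimal sketch using SQLite and hypothetical sample data (with a fixed year in place of YEAR(CURRENT_DATE), which SQLite does not provide) checks that the IN-subquery and the JOIN with DISTINCT agree, and shows why DISTINCT is needed:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE authors (author_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE books (
        book_id          INTEGER PRIMARY KEY,
        author_id        INTEGER REFERENCES authors (author_id),
        publication_year INTEGER
    );
    INSERT INTO authors VALUES (1, 'Author A'), (2, 'Author B'), (3, 'Author C');
    -- Author A has two qualifying books, Author B one old book, Author C none.
    INSERT INTO books VALUES (10, 1, 2023), (11, 1, 2023), (12, 2, 2015);
""")

TARGET_YEAR = 2023  # hypothetical stand-in for YEAR(CURRENT_DATE) - 1

subquery_rows = conn.execute("""
    SELECT * FROM authors
    WHERE author_id IN (SELECT author_id FROM books WHERE publication_year = ?)
""", (TARGET_YEAR,)).fetchall()

join_rows = conn.execute("""
    SELECT DISTINCT a.*
    FROM authors a
    JOIN books b ON a.author_id = b.author_id
    WHERE b.publication_year = ?
""", (TARGET_YEAR,)).fetchall()

# Without DISTINCT, Author A appears once per matching book.
join_no_distinct = conn.execute("""
    SELECT a.*
    FROM authors a
    JOIN books b ON a.author_id = b.author_id
    WHERE b.publication_year = ?
""", (TARGET_YEAR,)).fetchall()

print(subquery_rows, join_rows, len(join_no_distinct))
```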

4. Be Specific: Avoid Using SELECT *

Using SELECT * in your queries can negatively impact performance, especially when dealing with large tables or complex joins. This practice retrieves all columns from a table, including those you might not need, which can lead to unnecessary data transfer and processing.

Let's consider a scenario where you're working with an e-commerce database. You want to generate a report of recent product sales. Here's an example of how not to do it:

SELECT *

FROM sales s

JOIN products p ON s.product_id = p.product_id

WHERE s.sale_date >= DATE_SUB(CURRENT_DATE, INTERVAL 7 DAY);

This query retrieves all columns from both the sales and products tables, which could include unnecessary data like product descriptions, stock levels, or internal notes.

Instead, you should specify only the columns you need:

SELECT s.sale_id, s.sale_date, p.product_name, s.quantity, s.total_price

FROM sales s

JOIN products p ON s.product_id = p.product_id

WHERE s.sale_date >= DATE_SUB(CURRENT_DATE, INTERVAL 7 DAY);

This optimized query offers several benefits:

- Reduced Data Transfer: By selecting only necessary columns, you minimize the amount of data transferred between the database server and your application.

- Improved Query Speed: When the query asks only for columns that are stored in an index, the engine can often answer it from the index alone (a covering index) without reading the full rows.

- Lower Memory Usage: Your application will use less memory to store and process the results.

- Better Code Readability: Explicitly listing columns clarifies the data the query retrieves.
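The covering-index effect is easy to observe. The sketch below assumes SQLite, a simplified version of the sales table (no join, to keep the plan short), and a hypothetical index on (sale_date, product_id). In SQLite, sale_id as an INTEGER PRIMARY KEY is the row id and is stored in every index, so the narrow query needs nothing outside the index:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE sales (
        sale_id     INTEGER PRIMARY KEY,
        product_id  INTEGER,
        sale_date   TEXT,
        quantity    INTEGER,
        total_price NUMERIC,
        notes       TEXT
    );
    -- Hypothetical index holding the columns the narrow query filters on and returns.
    CREATE INDEX idx_sales_date_product ON sales (sale_date, product_id);
""")

def plan(sql):
    """Return the detail text of every EXPLAIN QUERY PLAN row, joined."""
    return " | ".join(r[-1] for r in conn.execute("EXPLAIN QUERY PLAN " + sql))

star_plan = plan("SELECT * FROM sales WHERE sale_date >= '2024-05-01'")
narrow_plan = plan(
    "SELECT sale_id, sale_date, product_id FROM sales WHERE sale_date >= '2024-05-01'"
)

print(star_plan)    # may use the index, but every full row must still be read
print(narrow_plan)  # answered from the index alone: USING COVERING INDEX
```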

5. Using EXISTS Instead of IN for Improved Performance

When working with subqueries, the IN operator can sometimes lead to performance issues, especially with large datasets, because some engines build the complete result of the subquery before comparing against it. The EXISTS operator can often perform better.

Let's consider the same scenario as in previous sections - you're managing a library database. You want to find all authors who have written at least one book in a specific genre, say "Science Fiction". Here's how you might approach this using IN:

SELECT * FROM authors

WHERE author_id IN (

SELECT author_id

FROM books

WHERE genre = 'Science Fiction'

);

While this query works, it might not be the most efficient approach, particularly if you have a large number of books or authors.

Instead, you can rewrite this query using EXISTS:

SELECT * FROM authors a

WHERE EXISTS (

SELECT 1

FROM books b

WHERE b.author_id = a.author_id

AND b.genre = 'Science Fiction'

);

This EXISTS-based query often performs better because:

- Efficiency: EXISTS can stop evaluating the subquery for a given author as soon as it finds one matching book, whereas some engines evaluate IN by collecting every value the subquery returns.

- Optimization: Many database engines can optimize EXISTS queries more effectively than IN queries.

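- NULL Handling: The difference shows up mainly in the negated forms. If the subquery can return a NULL, NOT IN returns no rows at all, while NOT EXISTS behaves as most people expect.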

- Readability: In complex queries, EXISTS can sometimes express the intent more clearly.

However, it's important to note that the performance difference between IN and EXISTS can vary depending on your specific database system, data distribution, and query complexity. In some cases, IN might perform equally well or even better.

To ensure you're using the most efficient approach:

- Test both versions with your actual data and typical query patterns.

- Use your database's EXPLAIN feature to compare query execution plans.

- Consider factors like index usage and data volume when choosing between IN and EXISTS.
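The NULL behavior is easy to trip over, so here is a small SQLite sketch with hypothetical data. The positive forms (IN and EXISTS) return the same authors. The negated forms diverge as soon as the subquery can return a NULL author_id:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE authors (author_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE books (book_id INTEGER PRIMARY KEY, author_id INTEGER, genre TEXT);
    INSERT INTO authors VALUES (1, 'Author A'), (2, 'Author B'), (3, 'Author C');
    INSERT INTO books VALUES
        (10, 1, 'Science Fiction'),
        (11, 2, 'Fantasy'),
        (12, NULL, 'Anthology');  -- a book with no recorded author
""")

def ids(sql):
    """Run a query and return the sorted author_id values."""
    return sorted(row[0] for row in conn.execute(sql))

in_ids = ids("""
    SELECT author_id FROM authors
    WHERE author_id IN (SELECT author_id FROM books WHERE genre = 'Science Fiction')
""")
exists_ids = ids("""
    SELECT a.author_id FROM authors a
    WHERE EXISTS (SELECT 1 FROM books b
                  WHERE b.author_id = a.author_id AND b.genre = 'Science Fiction')
""")

# Authors with no books at all: only Author C should qualify.
# 3 NOT IN (1, 2, NULL) evaluates to NULL, not TRUE, so the row is dropped.
not_in_ids = ids("""
    SELECT author_id FROM authors
    WHERE author_id NOT IN (SELECT author_id FROM books)
""")
not_exists_ids = ids("""
    SELECT a.author_id FROM authors a
    WHERE NOT EXISTS (SELECT 1 FROM books b WHERE b.author_id = a.author_id)
""")

print(in_ids, exists_ids, not_in_ids, not_exists_ids)  # [1] [1] [] [3]
```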

6. Leverage Stored Procedures for Efficiency

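Stored procedures are blocks of SQL stored in the database and executed by name. Depending on the database, they are compiled ahead of time or their execution plans are cached after the first call. They offer several advantages for query performance: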

- Reduced Network Traffic: Since only the procedure call is sent over the network, not the entire SQL statement, it minimizes data transfer between the application and database.

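- Faster Execution: Because the execution plan is typically cached and reused, the database doesn't need to parse and optimize the SQL on every call. Parameterized queries sent from an application often benefit from plan caching too, so measure before assuming a large gain.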

- Security: Stored procedures can enhance security by limiting direct table access.

For example, instead of sending the UPDATE statement from your application every time a user edits their profile, you could create a stored procedure (SQL Server syntax):

CREATE PROCEDURE UpdateUserProfile

@UserID INT,

@Name VARCHAR(100),

@Email VARCHAR(100)

AS

BEGIN

UPDATE Users

SET Name = @Name, Email = @Email

WHERE UserID = @UserID

END

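This procedure can then be called with a single statement, for example EXEC UpdateUserProfile @UserID = 42, @Name = 'Jane Doe', @Email = 'jane@example.com', which keeps the SQL in one place and reduces application code complexity.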

7. Optimize Database Design for Performance

A well-designed database is crucial for query performance. Consider these aspects:

- Normalization: Properly normalize your tables to reduce data redundancy and improve data integrity. However, be aware that over-normalization can lead to complex joins, potentially impacting performance.

- Indexing Strategy: Create indexes on columns frequently used in WHERE clauses and joins. But remember, too many indexes can slow down write operations.

- Partitioning: For very large tables, consider partitioning to improve query performance and manageability.

Regularly review your database design as your application evolves. What works well for a small dataset might not be optimal as your data grows.
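To see what an index on a frequently filtered column changes, compare the query plan before and after creating it. A minimal sketch, assuming SQLite and a hypothetical orders table:

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, total NUMERIC)"
)

QUERY = "SELECT order_id, total FROM orders WHERE customer_id = 1234"

def plan(sql):
    """Return the detail text of every EXPLAIN QUERY PLAN row, joined."""
    return " | ".join(r[-1] for r in conn.execute("EXPLAIN QUERY PLAN " + sql))

before = plan(QUERY)  # no usable index yet: full scan of orders
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY)   # equality lookup through the new index

print(before)
print(after)
```

Each extra index like this one also has to be updated on every INSERT, UPDATE, and DELETE, which is the write-side cost mentioned above.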

8. Minimize Large Write Operations

Large-scale data modifications can significantly impact database performance and disrupt other operations. Writing, modifying, or deleting large volumes of data can slow down queries, lock tables, and increase transaction log sizes. To maintain performance during these operations, consider the following strategies:

- Use filegroups (in SQL Server) or tablespaces (in other databases) to spread data across multiple disks, allowing I/O to run in parallel.

- Implement data partitioning to focus write operations on specific segments of large tables.

- Apply compression to reduce I/O, keeping in mind that it adds CPU work to every write.

- Break large operations into smaller batches to reduce table blocking and impact on concurrent queries.

- Consider minimal logging for bulk inserts where appropriate.

- Schedule extensive write operations during off-peak hours when possible.
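Batching is the easiest of these strategies to show in code. A minimal sketch, assuming SQLite and a hypothetical event_log table: it deletes old rows a fixed number at a time and commits after each batch, so locks are held only briefly. DELETE ... LIMIT is an optional compile-time feature in SQLite, so the sketch selects each batch of ids in a subquery instead. The batch size of 1,000 is arbitrary.

```python
import sqlite3

def purge_in_batches(conn, cutoff, batch_size=1000):
    """Delete event_log rows older than `cutoff`, committing after each batch.

    Returns (rows_deleted, batches_run). Each batch is its own short
    transaction, which limits how long other sessions wait on locks.
    """
    total, batches = 0, 0
    while True:
        with conn:  # commits this batch, or rolls it back on error
            cur = conn.execute(
                """
                DELETE FROM event_log
                WHERE id IN (
                    SELECT id FROM event_log WHERE created_at < ? LIMIT ?
                )
                """,
                (cutoff, batch_size),
            )
        if cur.rowcount == 0:
            return total, batches
        total += cur.rowcount
        batches += 1

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE event_log (id INTEGER PRIMARY KEY, created_at TEXT)")
# 2,500 old rows and 500 recent ones (hypothetical sample data).
conn.executemany(
    "INSERT INTO event_log (created_at) VALUES (?)",
    [("2020-01-01",)] * 2500 + [("2024-06-01",)] * 500,
)
conn.commit()

deleted, batches = purge_in_batches(conn, cutoff="2023-01-01")
remaining = conn.execute("SELECT COUNT(*) FROM event_log").fetchone()[0]
print(deleted, batches, remaining)  # 2500 3 500
```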

9. Avoid Negative Searches in WHERE Clauses

Negative searches, such as using NOT EQUALS (<>) or NOT LIKE in WHERE clauses, can significantly slow down query execution. While these operators are sometimes necessary, they often lead to suboptimal performance. Here's why you should minimize their use:

- Full table scans: Negative searches often require the database to scan the entire table, as they can't efficiently use indexes.

- Low selectivity: A condition such as department <> 'Sales' usually matches most of the table, so even when an index exists the optimizer often concludes that reading every row is cheaper than using it.

- Index inefficiency: Many database optimizers struggle to use indexes effectively with negative conditions.

Instead, consider these alternatives:

- Rewrite queries to use positive conditions when possible.

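- Where the data allows it, use a range condition instead of NOT EQUALS, for example quantity > 0 instead of quantity <> 0 when quantity can never be negative.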

- For NOT IN clauses, consider using NOT EXISTS, or a LEFT JOIN that keeps only the rows with no match (where the joined key IS NULL).

- If negative searches are unavoidable, ensure appropriate indexes are in place to support them.

For instance, instead of:

SELECT * FROM employees WHERE department <> 'Sales'

Consider:

SELECT * FROM employees WHERE department IN ('HR', 'Marketing', 'Finance', ...)

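By minimizing negative searches, you can often achieve significant improvements in query performance, especially for large datasets. Keep in mind that the IN list has to be maintained: if a new department is added, the two queries no longer return the same rows.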
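A small SQLite sketch with a hypothetical employees table and an index on department shows both sides of the trade-off: for this data the two queries return the same rows, but only the IN form lets the planner use the index. The department list is spelled out in full here because the sample data has only four departments.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (emp_id INTEGER PRIMARY KEY, name TEXT, department TEXT);
    CREATE INDEX idx_employees_department ON employees (department);
    INSERT INTO employees (name, department) VALUES
        ('Ana', 'HR'), ('Ben', 'Sales'), ('Cy', 'Marketing'),
        ('Di', 'Finance'), ('Eve', 'Sales');
""")

NEGATIVE = "SELECT * FROM employees WHERE department <> 'Sales'"
POSITIVE = ("SELECT * FROM employees "
            "WHERE department IN ('HR', 'Marketing', 'Finance')")

def plan(sql):
    """Return the detail text of every EXPLAIN QUERY PLAN row, joined."""
    return " | ".join(r[-1] for r in conn.execute("EXPLAIN QUERY PLAN " + sql))

same_rows = (sorted(conn.execute(NEGATIVE).fetchall())
             == sorted(conn.execute(POSITIVE).fetchall()))
print(same_rows)       # True for this data set
print(plan(NEGATIVE))  # a full SCAN of employees
print(plan(POSITIVE))  # a SEARCH using idx_employees_department
```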

10. Utilize Query Optimization Tools

Query optimization tools can be invaluable in identifying and resolving performance issues. Most major database systems come with built-in tools:

- SQL Server: Use Query Store and Database Engine Tuning Advisor.

- Oracle: Utilize SQL Tuning Advisor and EXPLAIN PLAN.

- MySQL: Employ EXPLAIN and the Performance Schema.

These tools can help you:

- Identify slow-running queries

- Suggest missing indexes

- Provide alternative query plans

For instance, using EXPLAIN in MySQL:

EXPLAIN SELECT * FROM Orders WHERE CustomerID = 1234;

This command shows how MySQL executes the query, helping you understand if it's using indexes effectively or if table scans are occurring.
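The same kind of check can be automated. As a sketch, assuming SQLite (whose EXPLAIN QUERY PLAN plays the role of MySQL's EXPLAIN here) and a hypothetical orders table, the helper below flags queries that the planner would answer with a full table scan. You might run something like it over your most frequent queries after each schema change.

```python
import re
import sqlite3

def full_scans(conn, sql, params=()):
    """Return the tables that EXPLAIN QUERY PLAN reports as full table scans.

    Matches detail lines such as 'SCAN orders' or, in older SQLite versions,
    'SCAN TABLE orders'. Scans that go through an index ('USING ...') are ignored.
    """
    tables = []
    for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params):
        detail = row[-1]
        match = re.match(r"SCAN (?:TABLE )?(\w+)", detail)
        if match and "USING" not in detail:
            tables.append(match.group(1))
    return tables

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, status TEXT);
    CREATE INDEX idx_orders_customer ON orders (customer_id);
""")

queries = {
    "by_customer": "SELECT * FROM orders WHERE customer_id = 1234",
    "by_status": "SELECT * FROM orders WHERE status = 'shipped'",
}
report = {name: full_scans(conn, sql) for name, sql in queries.items()}
print(report)  # {'by_customer': [], 'by_status': ['orders']}
```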

But What If You Could Query Your Database Without Actually Writing (and Optimizing) SQL Queries?

In the past, data analysis was primarily the responsibility of specialized IT or data teams who handled everything from data extraction to report generation. These centralized processes were time-consuming, and only a select few had access to the insights. As the demand for faster, more agile decision-making has grown, traditional tools have struggled to keep up.

Today, businesses need real-time insights that can be accessed by anyone across the organization, not just the data experts.

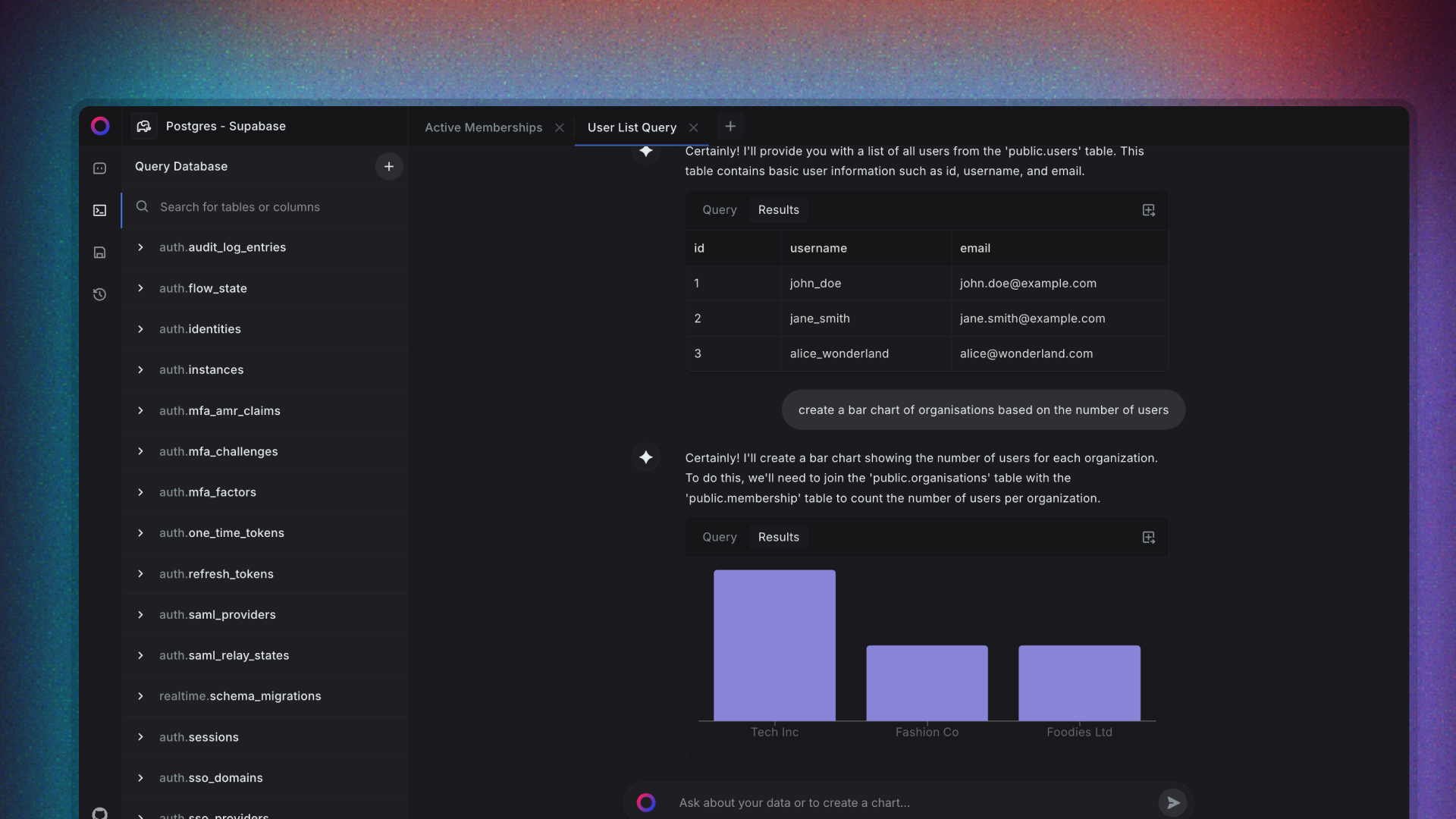

Enter Sequel, a modern, user-friendly data solution designed to simplify the way businesses interact with their data.

With Sequel, you can:

- Ask questions in plain English.

- Have an AI assistant uncover hidden insights in your data.

- Get real-time insights without writing SQL.

- Visualize your data effortlessly.
